How LLMs Use Outdated Pages (and How to Prevent It)

Summary (TL;DR)

Large Language Models (LLMs) frequently return outdated information because their core knowledge is locked to the data cut-off date of their last training cycle. This presents reputational, legal, and SEO risks for brands if users or machines surface misleading content in search or AI-driven experiences. Practical prevention strategies for SEO and content teams include rigorous content refresh protocols, optimized technical signals (like canonical tags and sitemaps), and leveraging retrieval-augmented generation (RAG) or knowledge editing technologies. Proactive detection and management of stale pages are essential to mitigate risk and maintain authority—especially as LLM-powered interfaces proliferate across search and digital channels.

---

Introduction

The surge in adoption of LLM-powered tools—both for general users and business-facing applications—has fundamentally changed how content is consumed, synthesized, and represented online. Yet, the Achilles heel of even the most advanced LLMs is their reliance on static training data, which quickly becomes obsolete. This puts marketing and SEO consultants in a position where outdated site pages aren't just an organic ranking risk, but also a potential vector for misinformation in the AI era.

This post explores:

- Why LLMs use outdated web pages

- The SEO/business impact of stale content being surfaced by generative models

- Practical, scalable tactics to reduce the risk of LLMs amplifying your outdated content

---

How and Why LLMs Use Outdated Pages

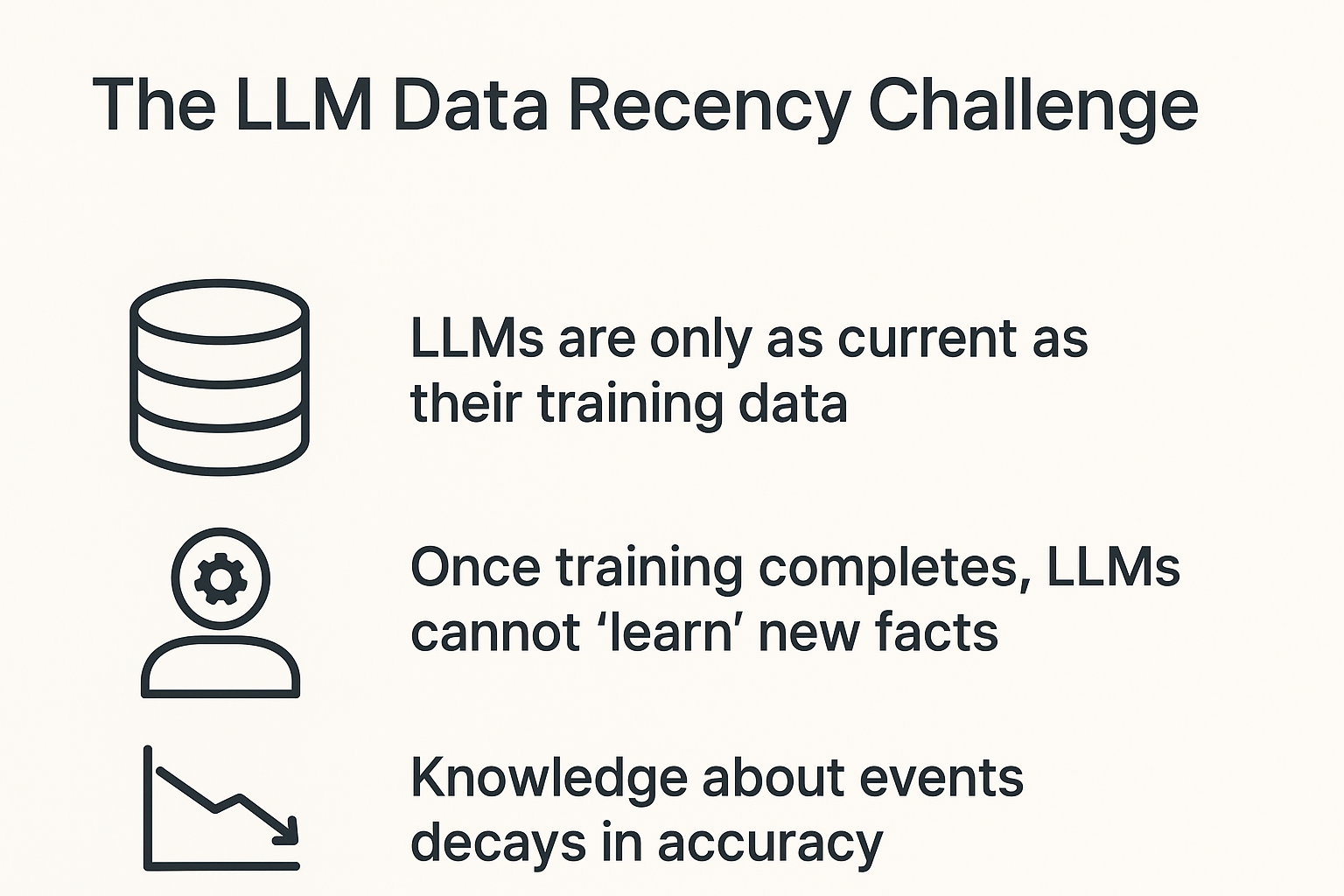

The LLM Data Recency Challenge

- LLMs are only as current as their training data. Most LLMs are trained on massive scraped web datasets, which are only periodically refreshed[[2]](https://www.projectpro.io/article/llm-limitations/1045).

- Once training completes, LLMs cannot "learn" new facts unless they are retrained or fine-tuned again, which is technically complex, resource-intensive, and not real-time[[2]](https://www.projectpro.io/article/llm-limitations/1045).

- Knowledge about events, products, or organizations quickly decays in accuracy. For instance, a model trained up to 2022 will still consider CEO or event data from pre-2022 as current—even if leadership has changed or products have been discontinued[[1]](https://arxiv.org/html/2404.08700v1)[[2]](https://www.projectpro.io/article/llm-limitations/1045).

Key Risks for Web Content Owners

| SEO/Brand Impact | Description |
|------------------------------------------|------------------------------------------------------------------------|
| Reputation damage | LLMs answer with your outdated info (e.g., old pricing, discontinued products) in AI chat or search[[4]](https://www.deepchecks.com/risks-of-large-language-models/). |
| Legal/compliance risk | Outdated legal disclaimers, medical advice, or regulated content can be repeated and cause violations[[4]](https://www.deepchecks.com/risks-of-large-language-models/). |
| Lost clicks/conversions | Users who get wrong info from LLM chats may bounce, distrust, or never reach your updated site. |
| Organic ranking erosion | Search engines may demote persistently outdated pages, reducing visibility[[4]](https://www.deepchecks.com/risks-of-large-language-models/). |

Real-World Examples

- In benchmarking studies, only the newest models answered correctly about a soccer player's current team; most models returned obsolete data because they hadn’t seen recent updates[[1]](https://arxiv.org/html/2404.08700v1).

- Product pages showing unavailable SKUs were cited in generative search summaries months after products were discontinued.

---

Detection: Identifying Outdated Pages LLMs Might Surface

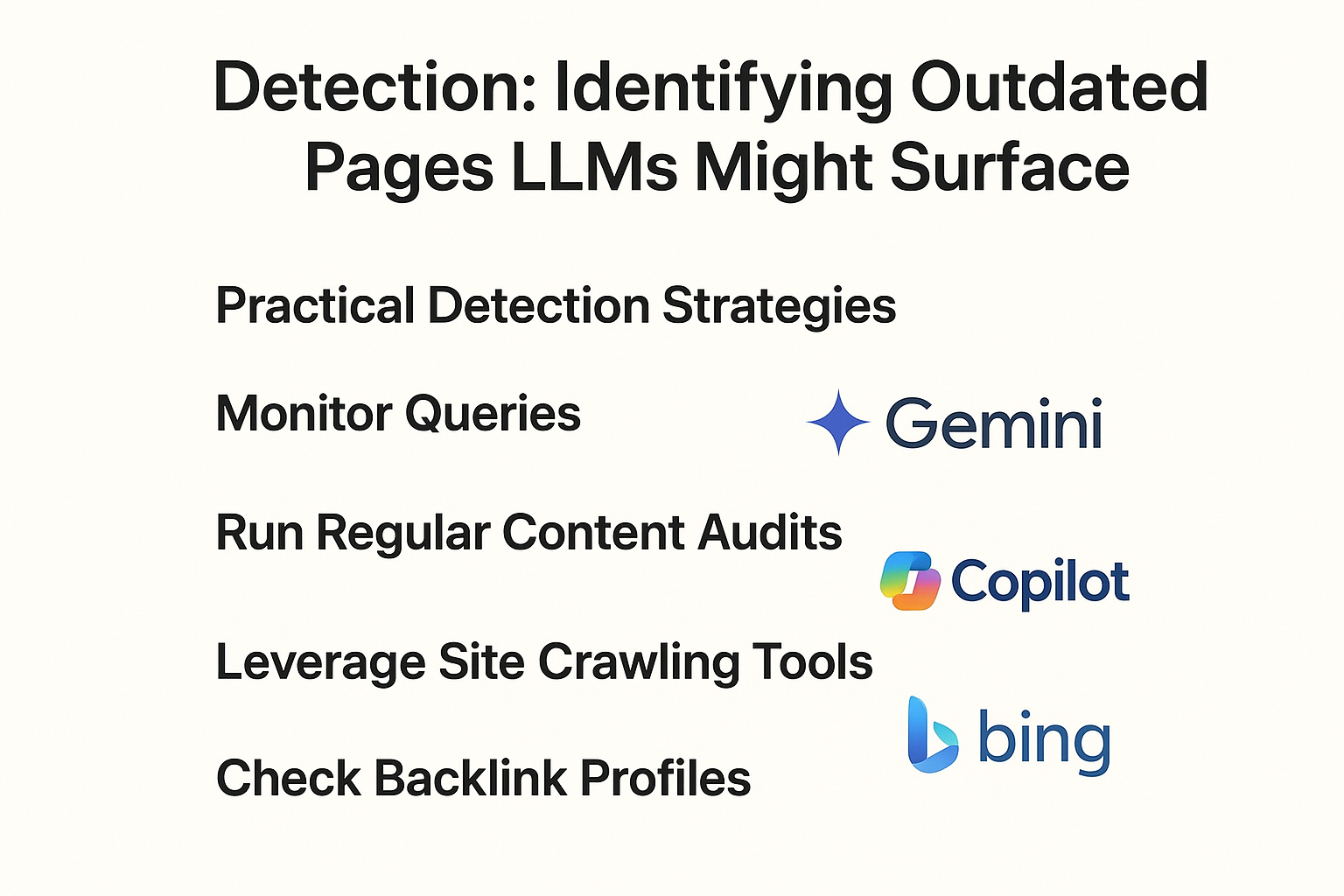

Practical Detection Strategies

- Monitor queries that surface outdated facts in AI search or SGE. Use Search Labs, Bing Copilot, or Gemini snippets to identify where your content is referenced and evaluate answers for currency[[3]](https://hai.stanford.edu/news/how-do-we-fix-and-update-large-language-models).

- Run regular content audits for time-sensitive facts. Focus on leadership names, product specs, event dates, and regulatory details[[4]](https://www.deepchecks.com/risks-of-large-language-models/).

- Leverage site crawling tools (Screaming Frog, Ahrefs, SEMrush, etc.) to flag pages not updated in over 12-18 months.

- Check backlink profiles: Old popular pages often remain in LLM datasets; prioritize reviewing and updating any high-DR legacy content.
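As a rough illustration of the crawl-based check above, the sketch below flags sitemap entries whose `lastmod` is older than a threshold. It assumes your sitemap follows the standard sitemaps.org schema and that `lastmod` values are ISO 8601 dates; the function names and the 365-day default are illustrative choices, not part of any crawling tool.

```python
from datetime import datetime, timedelta, timezone
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_lastmod(value):
    """Parse an ISO 8601 lastmod value into a timezone-aware UTC datetime."""
    parsed = datetime.fromisoformat(value.strip().replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Date-only values carry no timezone; treating them as UTC is an assumption.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


def find_stale_urls(sitemap_xml, now, max_age_days=365):
    """Return (url, lastmod_date) pairs older than max_age_days.

    Entries with no lastmod are reported with None so they can be
    reviewed manually rather than silently skipped.
    """
    root = ET.fromstring(sitemap_xml)
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        if lastmod is None or not lastmod.strip():
            stale.append((loc, None))
            continue
        modified = parse_lastmod(lastmod)
        if modified < cutoff:
            stale.append((loc, modified.date().isoformat()))
    return stale
```

A check like this only reflects what the sitemap claims, so it works best alongside a manual review of the facts on each flagged page.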

---

How to Prevent LLMs from Using Outdated Pages

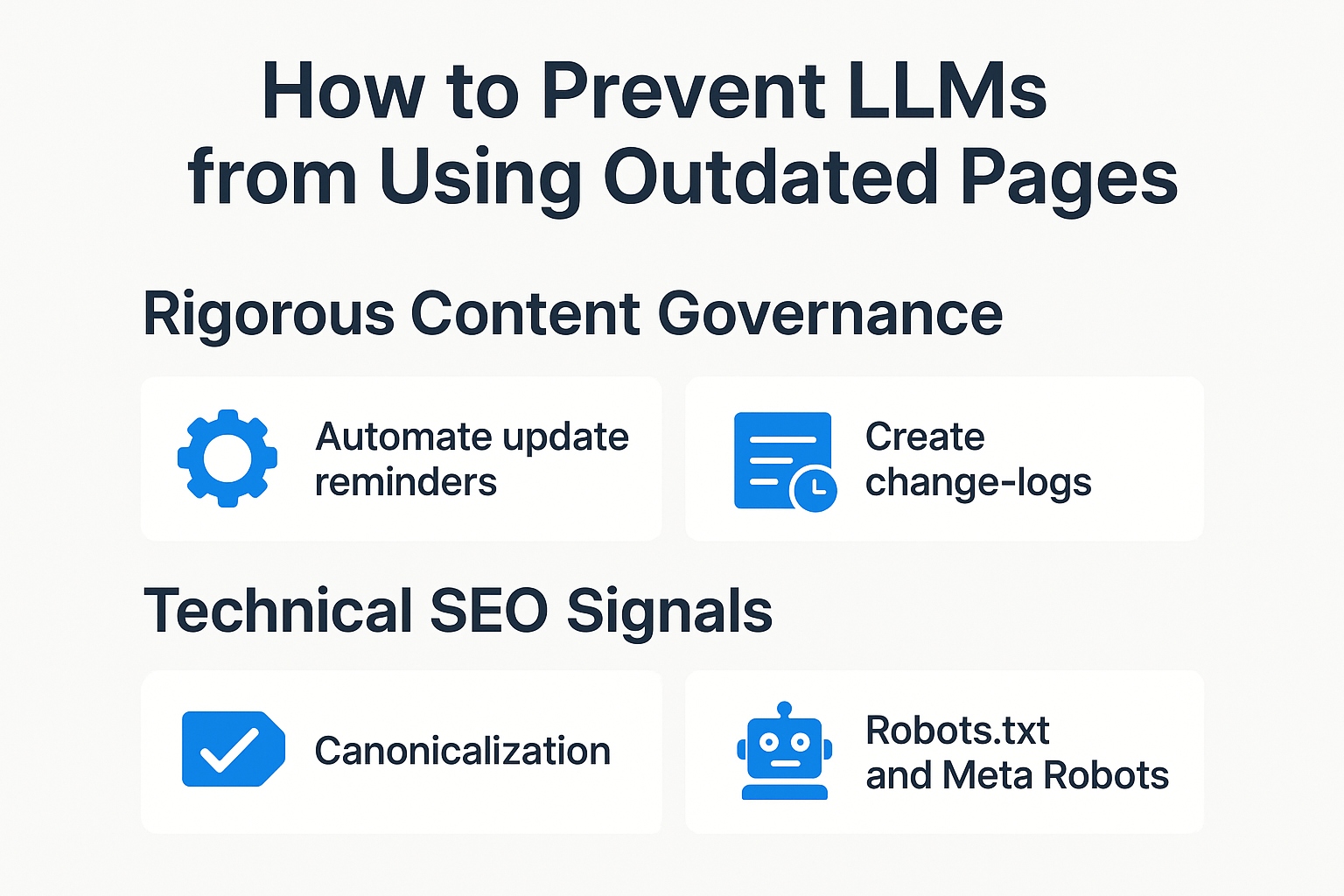

1. Rigorous Content Governance

- Automate update reminders for any time-sensitive content—build internal review cycles at 6- or 12-month intervals.

- Create change-logs on important pages (e.g., “Last updated on” in the schema/visible copy). This signals freshness to LLM dataset builders and crawlers[[4]](https://www.deepchecks.com/risks-of-large-language-models/).
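One way to automate the reminders described above is a small scheduled script that lists pages whose review date has passed. This is a minimal sketch: the `Page` record, its field names, and the idea of storing a per-page interval are assumptions for illustration, and in practice the data would come from your CMS.

```python
import calendar
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    last_reviewed: date
    review_interval_months: int  # e.g. 6 or 12, per the review cycle above


def add_months(start, months):
    """Add calendar months, clamping the day to the end of the target month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def pages_due_for_review(pages, today):
    """Return (due_date, url) pairs for pages whose review is due, oldest first."""
    due = [(add_months(p.last_reviewed, p.review_interval_months), p.url) for p in pages]
    return sorted((due_date, url) for due_date, url in due if due_date <= today)
```

The output of `pages_due_for_review` could feed a ticket queue or an email digest, depending on how your team tracks content work.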

2. Technical SEO Signals

- Canonicalization: Use canonical tags to consolidate preferred URLs. Legacy or duplicate URLs should point their canonical tag at the latest version so that crawlers and information extractors that honor the hint treat the current page as the authoritative one[[6]](https://dl.acm.org/doi/10.1145/3726302.3730254).

- Robots.txt and Meta Robots: Keep outdated pages out of indexes using `noindex, nofollow` until they’re refreshed or retired[[4]](https://www.deepchecks.com/risks-of-large-language-models/). A crawler has to fetch a page to see its `noindex` directive, so avoid also disallowing the same URL in robots.txt.

- XML Sitemaps: Regularly update sitemap `lastmod` properties. Some data miners and crawlers that feed LLM datasets use sitemaps for seed links; accurate `lastmod` signals recency[[4]](https://www.deepchecks.com/risks-of-large-language-models/).

- Structured Data/Schema Markup: Use properties like `dateModified` to help information extractors and LLM trainers detect fresh content[[4]](https://www.deepchecks.com/risks-of-large-language-models/).
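To make the freshness signals above concrete, here is a hedged sketch that generates a schema.org `Article` JSON-LD snippet with `dateModified` and a matching sitemap `<url>` entry with `lastmod`. The helper names are hypothetical, and how you inject the output into templates depends on your CMS; whether any given crawler or dataset builder actually uses these fields is not guaranteed.

```python
import json
from datetime import datetime, timezone
from xml.sax.saxutils import escape


def article_json_ld(headline, url, published, modified):
    """Build a JSON-LD <script> tag carrying datePublished and dateModified."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    # Escape "</" so text in the headline cannot close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"


def sitemap_url_entry(url, modified):
    """Build one sitemap <url> element with an ISO 8601 lastmod value."""
    return f"<url><loc>{escape(url)}</loc><lastmod>{modified.isoformat()}</lastmod></url>"
```

Driving both outputs from the same stored modification timestamp keeps the schema markup, the sitemap, and the visible "Last updated" copy from drifting apart.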

3. Retrieval-Augmented Generation (RAG) & Knowledge Editing

- Deploy RAG systems for your own chatbots or search: Instead of relying solely on pre-trained models, RAG architectures look up current web pages or databases in real time[[1]](https://arxiv.org/html/2404.08700v1)[[3]](https://hai.stanford.edu/news/how-do-we-fix-and-update-large-language-models).

- Open source LLMs and specialized knowledge editing tools can surgically update facts with parameter-efficient tuning—though this is complex and resource-heavy outside enterprise environments[[1]](https://arxiv.org/html/2404.08700v1)[[3]](https://hai.stanford.edu/news/how-do-we-fix-and-update-large-language-models).
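The retrieval idea behind RAG can be sketched without any model at all. The toy example below ranks documents by keyword overlap, breaks ties in favor of the most recently modified page, and builds a prompt that exposes each source's date. The `Document` type, the scoring method, and the prompt wording are all simplifying assumptions; a production system would typically use embeddings and a vector store, and would pass the prompt to an LLM.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    url: str
    text: str
    last_modified: date


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by term overlap, preferring newer pages on ties."""
    query_terms = tokenize(query)
    scored = []
    for doc in documents:
        overlap = len(query_terms & tokenize(doc.text))
        if overlap:
            scored.append((overlap, doc.last_modified, doc))
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [doc for _, _, doc in scored[:top_k]]


def build_prompt(query, documents, top_k=2):
    """Assemble a prompt that grounds the answer in dated sources."""
    lines = ["Answer using only the sources below. Prefer the most recently modified source."]
    for doc in retrieve(query, documents, top_k):
        lines.append(f"[{doc.url} | last modified {doc.last_modified.isoformat()}] {doc.text}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)
```

Because the retrieval step reads from your current content store at answer time, updating a page changes the answer immediately, with no retraining involved.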

4. Redirects and Content Decay Management

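- 301-redirect outdated URLs to their current counterparts. Crawlers that follow permanent redirects will then collect the current page instead of the outdated one, which helps any future dataset built from live site crawls.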

- Retire or consolidate thin, legacy pages with outdated info—especially for high-ticket, regulated, or evergreen content categories.
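When many legacy URLs are retired over time, redirect maps tend to accumulate chains and occasional loops. The sketch below, which assumes your redirects are available as a simple source-to-target mapping (a hypothetical format), flattens chains so each old URL points directly at its final destination and raises an error on loops.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow a redirect map from url to its final target.

    Raises ValueError on a loop or when the chain exceeds max_hops.
    """
    seen = [url]
    current = url
    while current in redirects:
        current = redirects[current]
        if current in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [current]))
        seen.append(current)
        if len(seen) - 1 > max_hops:
            raise ValueError(f"redirect chain from {url} exceeds {max_hops} hops")
    return current


def flatten_redirects(redirects):
    """Return a map where every source points straight at its final target."""
    return {source: resolve_redirect(source, redirects) for source in redirects}
```

For example, a map where `/pricing-2021` redirects to `/pricing-2023`, which in turn redirects to `/pricing`, flattens so that both old URLs point directly at `/pricing`.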

---

Implementation Roadmap

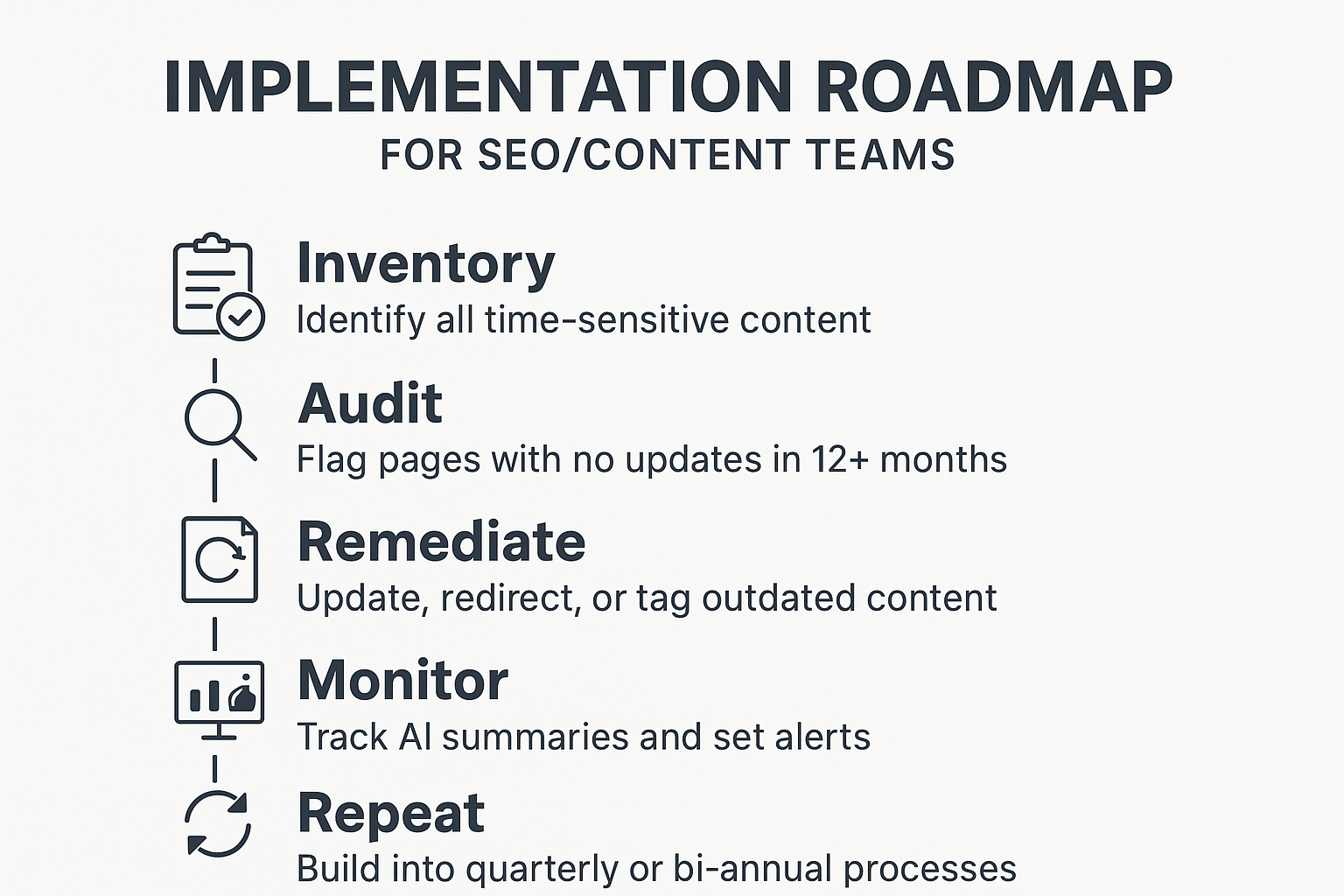

Actionable Workflow for SEO/Content Teams

- Inventory: Identify all time-sensitive content (e.g., products, events, financial/legal info).

- Audit: Use crawl/indexation/data tools to flag pages with no updates in 12+ months.

- Remediate:

  - Update, redirect, or canonically tag outdated content.

  - Add `dateModified` in schema and visible copy.

- Monitor:

  - Track AI summaries and chat answers referencing your site.

  - Set automated alerts for mentions of your brand/content in AI search tools.

- Repeat: Build these steps into quarterly or twice-yearly processes.
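The audit and remediate steps in this workflow can be approximated with a simple triage rule. The sketch below is one possible policy, not a prescribed one: the `PageRecord` fields, the action labels, and the 365-day threshold are all assumptions you would tune to your own content inventory.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PageRecord:
    url: str
    last_modified: date
    time_sensitive: bool                   # flagged during the inventory step
    replacement_url: Optional[str] = None  # a newer page covering the same topic


def triage(page, today, max_age_days=365):
    """Suggest a remediation action for one page (illustrative policy only)."""
    age_days = (today - page.last_modified).days
    if age_days <= max_age_days:
        return "monitor"   # recent enough; keep tracking AI answers
    if page.replacement_url:
        return "redirect"  # a current counterpart exists
    if page.time_sensitive:
        return "update"    # stale and high risk; refresh first
    return "review"        # stale but low risk; decide manually
```

Running a rule like this over the full inventory each cycle gives the team a prioritized list instead of a raw crawl export.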

---

Conclusion/Key Takeaways

- LLMs amplify outdated information if not actively managed at the source—this presents real SEO and business risks in the era of AI-powered search and content discovery.

- Consistent, automated content review processes are critical to defending against stale content propagation.

- Technical signals (canonical tags, schema, sitemaps, robots instructions) make it easier for both LLMs and traditional search engines to identify and surface only your most accurate pages.

- Future-proof your content estate by retiring, redirecting, or updating old information and making freshness signals visible and machine-readable.

- Prepare for an ecosystem where your content’s recency is evaluated by both crawlers and LLMs—optimize accordingly.

---

FAQs

Q: Why don’t LLMs just crawl the web for the latest info?

A: Training is expensive in cost and compute, so LLMs are trained on static snapshots of data. A model can reflect newer information only through retrieval at answer time (RAG) or through retraining, and most commercial models are retrained infrequently[[2]](https://www.projectpro.io/article/llm-limitations/1045)[[1]](https://arxiv.org/html/2404.08700v1)[[3]](https://hai.stanford.edu/news/how-do-we-fix-and-update-large-language-models).

Q: Will using “noindex” keep a page out of LLMs?

A: “Noindex” tells compliant search engines not to index a page, which likely reduces its future inclusion in datasets built from search indexes. It does not stop the page from being crawled, and it cannot remove content already present in historic LLM training data[[4]](https://www.deepchecks.com/risks-of-large-language-models/).

Q: How often should sites audit for outdated pages?

A: At minimum, every 6-12 months for time-sensitive content. High-impact brands should monitor brand mentions in AI search and generative answers continuously.

Q: Are all LLMs equally outdated?

A: No. Release cycles vary, and some LLMs are more up-to-date than others. Testing models against time-sensitive benchmark queries can reveal recency gaps[[1]](https://arxiv.org/html/2404.08700v1)[[5]](https://aclanthology.org/2025.naacl-long.381.pdf).

---

Citations

1. Is Your LLM Outdated? Benchmarking LLMs & Alignment Algorithms for Time-Sensitive Knowledge - arXiv

2. 10 Biggest Limitations of Large Language Models - ProjectPro

3. How Do We Fix and Update Large Language Models? - Stanford HAI

4. Risks of Large Language Models: A comprehensive guide - DeepChecks

5. Is Your LLM Outdated? A Deep Look at Temporal Generalization - ACL Anthology

6. Text Obsoleteness Detection using Large Language Models - ACM

7. Large(r) language models: Too big to fail? - IBM