Product Page Patterns That LLMs Prefer

TL;DR: Large language models process product pages through vector embeddings and semantic understanding rather than keyword matching. To optimize for LLM visibility, implement comprehensive JSON-LD schema markup with 30+ product properties, ensure JavaScript content is prerendered for AI crawlers, structure content with clear heading hierarchies, and maintain fresh data through automated updates. Technical implementation requires validation across multiple AI systems, baseline metric tracking, and continuous content optimization cycles.

Introduction

The shift from traditional search engines to AI-powered recommendations has fundamentally altered how product pages must be structured. When consumers ask ChatGPT, Perplexity, or Google's AI Overviews (formerly SGE) for product recommendations, these systems don't scan for keywords. Instead, they process product information through mathematical representations called vector embeddings, analyze multi-modal data (text, images, structured data), and make semantic connections between related concepts[[1]](https://prerender.io/blog/llm-product-discovery/).

For marketing and SEO consultants managing ecommerce clients, this represents a critical pivot point. LLMs use Retrieval-Augmented Generation (RAG) systems to identify and recommend products, meaning your technical implementation directly impacts whether your clients' products appear in AI-generated recommendations at all[[1]](https://prerender.io/blog/llm-product-discovery/). This guide provides the exact patterns, technical specifications, and validation processes required to position product pages for maximum LLM visibility.

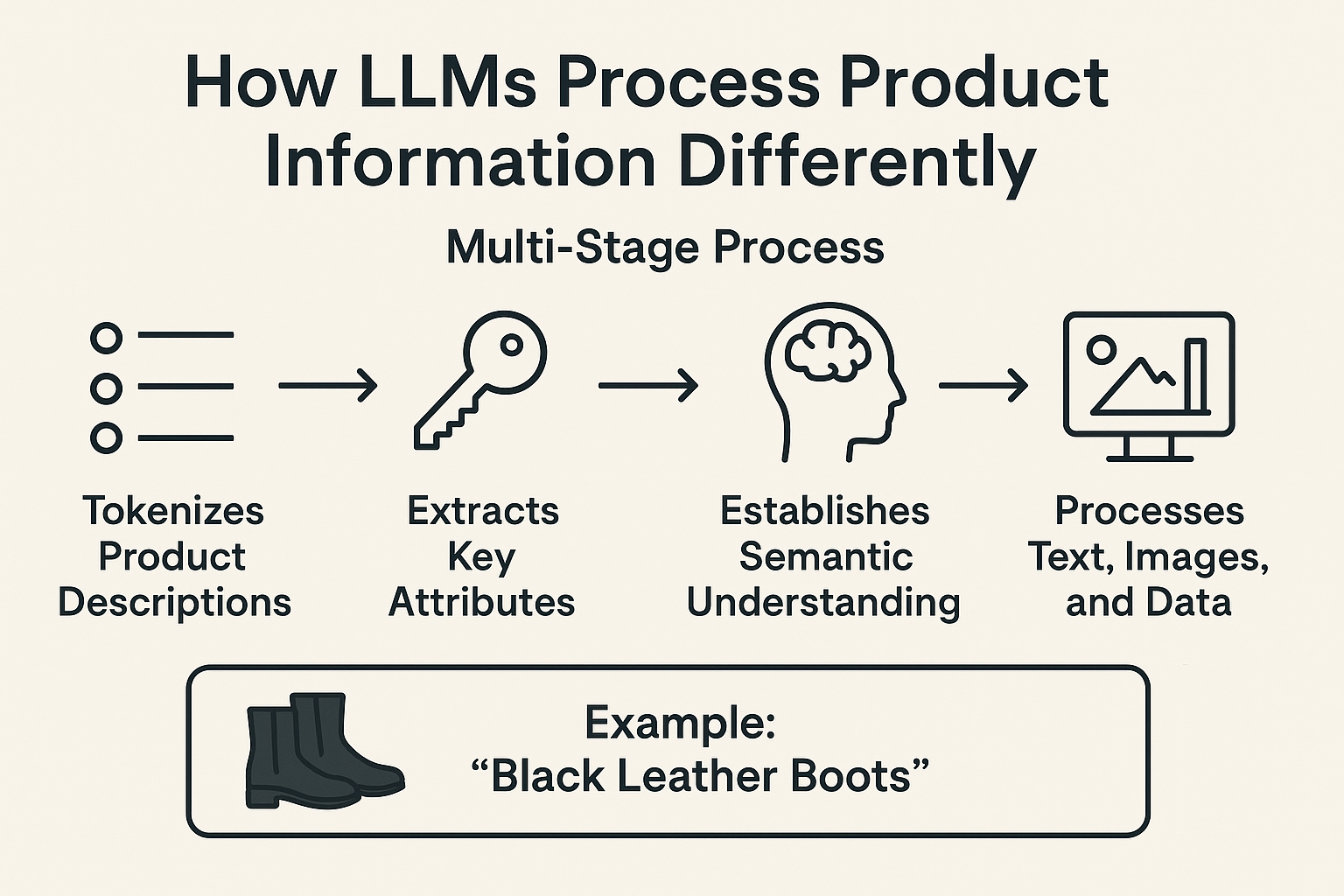

How LLMs Process Product Information Differently

LLMs break down product pages through a multi-stage process that differs fundamentally from traditional search crawling. The system tokenizes product descriptions and user queries, extracts key attributes like brand and category, establishes semantic understanding by connecting related concepts, and processes text, images, and structured data simultaneously[[1]](https://prerender.io/blog/llm-product-discovery/).

Consider a practical example. When a user queries "black leather boots," the LLM doesn't simply match those three words. It processes the relationship between color, material, product type, seasonal use, and style preferences. The system automatically connects "hiking boots" with "outdoor activities" and "waterproof materials" without requiring explicit user specification[[1]](https://prerender.io/blog/llm-product-discovery/).

This semantic processing capability means your product pages must provide contextual signals beyond basic product specifications. The structure, markup, and content organization directly influence whether an LLM can accurately represent your products in its recommendations.

Critical Technical Foundation: JavaScript and Crawler Access

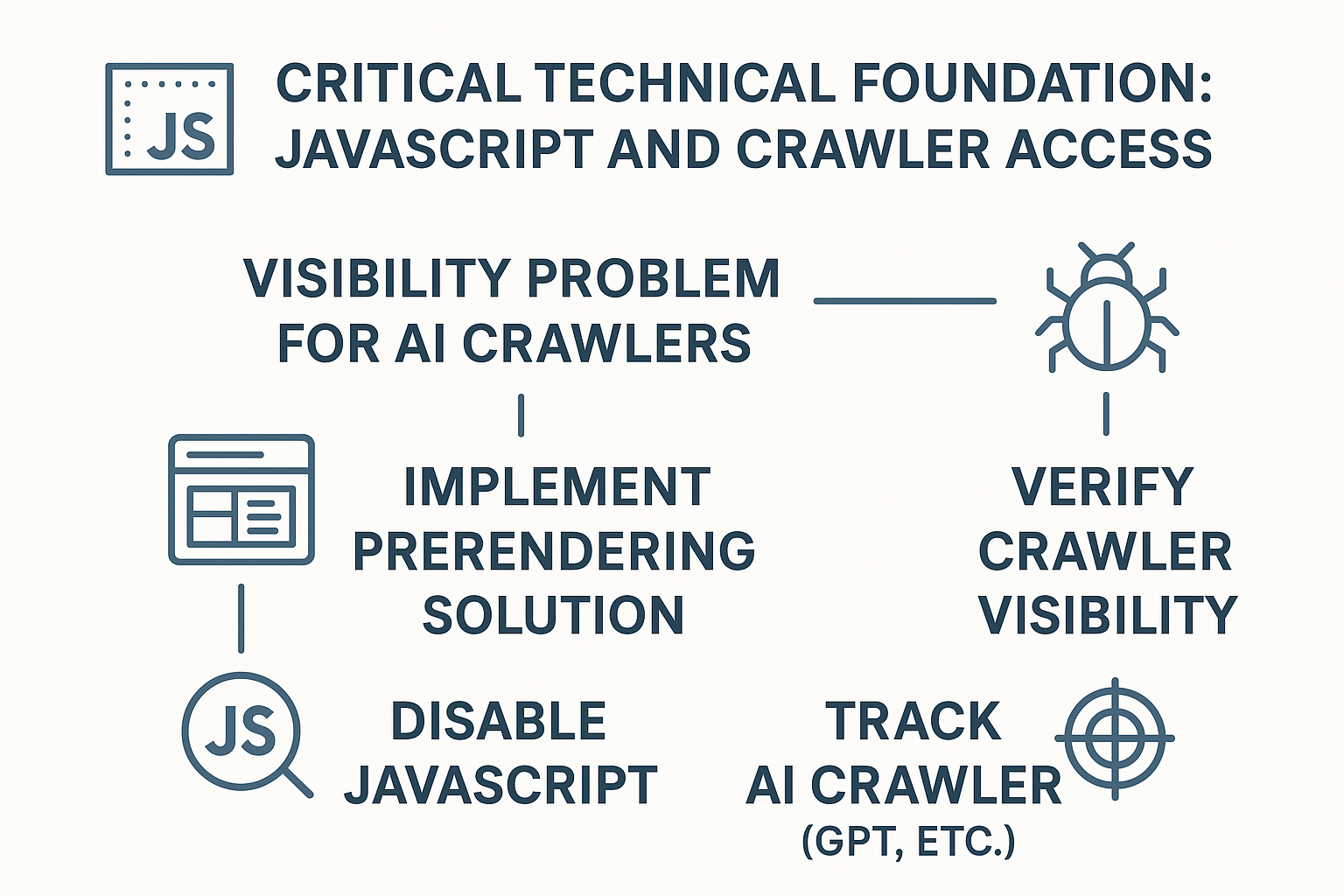

Many ecommerce platforms rely heavily on JavaScript to display product information, creating an immediate visibility problem for AI crawlers. The first critical step is implementing a prerendering solution that serves HTML versions of your content to AI crawlers[[1]](https://prerender.io/blog/llm-product-discovery/).

Without this foundation, all subsequent optimization efforts fail because AI crawlers cannot access your product data. To verify crawler visibility, disable JavaScript in your browser and confirm that product names, prices, and descriptions remain displayed. If they disappear, AI crawlers cannot see them either[[1]](https://prerender.io/blog/llm-product-discovery/).
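
The manual check above can also be approximated in a script. The following is a minimal sketch, assuming a crawler that does not execute JavaScript sees only the text present in the raw HTML response. The function name `missing_from_raw_html` and the sample markup are hypothetical and exist only for illustration. Text inside `<script>` and `<style>` is ignored, so this tests visible content only, not JSON-LD.

```python
import re
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(html, expected_strings):
    """Return the expected strings that do not appear in the page's visible text."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    text = re.sub(r"\s+", " ", " ".join(parser.chunks)).lower()
    return [s for s in expected_strings if s.lower() not in text]


# Hypothetical pages: one rendered client-side, one prerendered.
client_rendered = (
    '<html><body><div id="root"></div>'
    '<script>render({"name": "Trail Boot", "price": "129.00"})</script>'
    "</body></html>"
)
prerendered = (
    '<html><body><h1>Trail Boot</h1><p class="price">$129.00</p></body></html>'
)

print(missing_from_raw_html(client_rendered, ["Trail Boot", "129.00"]))
print(missing_from_raw_html(prerendered, ["Trail Boot", "129.00"]))
```

To run this against a live page, the HTML string could come from a plain HTTP fetch such as `urllib.request.urlopen(url).read().decode()`, which does not run scripts.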

After implementing prerendering, create a comprehensive audit spreadsheet tracking specific AI crawlers (GPT, Claude, Gemini, formerly Bard), document exactly what content they can and cannot see, and establish baseline metrics for comparison. Test your top 20 product pages across different AI systems to identify patterns in what gets recognized versus missed[[1]](https://prerender.io/blog/llm-product-discovery/).

Comprehensive Schema Markup Implementation

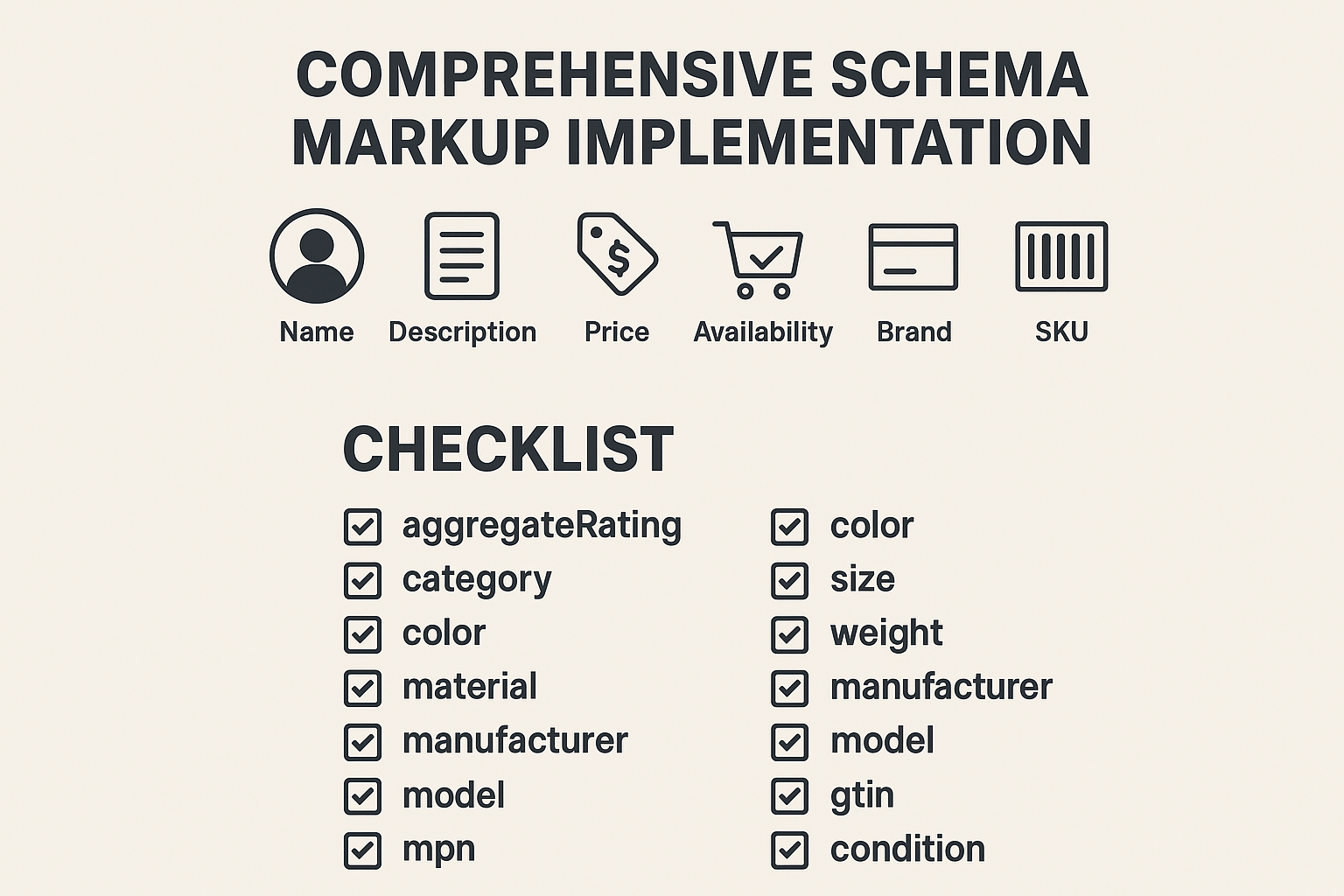

Schema markup serves as the primary communication layer between your product data and LLM systems. Add JSON-LD markup to every product page, placing it in the `<head>` section rather than scattering microdata through the page, so the full markup loads even if scripts fail[[3]](https://seoprofy.com/blog/llm-seo/).

Core Schema Properties

At minimum, include name, description, price, availability, brand, and SKU in your schema markup. However, LLM optimization requires going beyond basics. Create a comprehensive schema property checklist with 30+ properties, including aggregateRating, category, color, size, weight, material, manufacturer, model, gtin, mpn, and condition[[1]](https://prerender.io/blog/llm-product-discovery/).

Go beyond basic product schema by implementing related schemas including Review, Offer, Organization, and BreadcrumbList[[1]](https://prerender.io/blog/llm-product-discovery/). This interconnected schema structure provides LLMs with complete contextual understanding of your products within your broader catalog and brand ecosystem.
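
As an illustration of the properties listed above, here is a minimal sketch that builds Product JSON-LD from a plain dictionary. The input keys, the helper names `build_product_jsonld` and `render_script_tag`, and the sample values are assumptions made for this example. In the schema.org vocabulary, price and availability sit on a nested Offer rather than directly on the Product, so the sketch places them there.

```python
import json

# Optional schema.org Product properties copied over when the catalog has them.
OPTIONAL_PROPERTIES = ("category", "color", "size", "material", "model", "gtin", "mpn")


def build_product_jsonld(product):
    """Map a catalog record (hypothetical field names) to schema.org Product JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "description": product["description"],
        "sku": product["sku"],
        "brand": {"@type": "Brand", "name": product["brand"]},
        "offers": {
            "@type": "Offer",
            "price": product["price"],
            "priceCurrency": product["currency"],
            "availability": "https://schema.org/" + product["availability"],
        },
    }
    for key in OPTIONAL_PROPERTIES:
        if product.get(key):
            data[key] = product[key]
    if product.get("rating_value") and product.get("review_count"):
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": product["rating_value"],
            "reviewCount": product["review_count"],
        }
    return data


def render_script_tag(data):
    """Serialize JSON-LD into a script tag, escaping '</' so it cannot end the tag early."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


# Made-up sample record for illustration only.
sample = {
    "name": "Trail Boot",
    "description": "Waterproof leather hiking boot.",
    "sku": "TB-001",
    "brand": "Example Outfitters",
    "price": "129.00",
    "currency": "USD",
    "availability": "InStock",
    "color": "Black",
    "material": "Leather",
    "rating_value": "4.6",
    "review_count": 212,
}

print(render_script_tag(build_product_jsonld(sample)))
```

Generating the markup from the same record that drives the page keeps the schema and the visible content in step, which matters once prices and stock levels change.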

Schema Validation Process

Use Google's Rich Results Test to check for errors and fix anything showing as invalid. Set up automated daily validation checks using tools like Schema Markup Validator API, create alerts for any validation failures, and maintain a validation score dashboard[[1]](https://prerender.io/blog/llm-product-discovery/).

Validate in both Google Rich Results Test and the Schema.org Playground, as both tools flag missing or deprecated properties[[3]](https://seoprofy.com/blog/llm-seo/). Run URL Inspection and test the live URL in Search Console, then request indexing to push the updated markup into Google's index[[3]](https://seoprofy.com/blog/llm-seo/).
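
Between runs of the external validators, a lightweight local check can catch obvious regressions. The sketch below is not a replacement for those tools. It only extracts JSON-LD blocks from a page and reports missing properties. The required-property lists are an assumption based on the minimum set named in this article, and `product_schema_problems` is a hypothetical helper name.

```python
import json
from html.parser import HTMLParser

# Assumed minimum sets, following the properties this article calls essential.
REQUIRED_PRODUCT_KEYS = ("name", "description", "brand", "sku", "offers")
REQUIRED_OFFER_KEYS = ("price", "priceCurrency", "availability")


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.blocks.append("".join(self._buffer))


def product_schema_problems(html):
    """Return a list of human-readable problems; an empty list means the check passed."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    problems, products = [], []
    for raw in extractor.blocks:
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON-LD: {exc.msg}")
            continue
        items = parsed if isinstance(parsed, list) else [parsed]
        products += [i for i in items if isinstance(i, dict) and i.get("@type") == "Product"]
    if not products:
        problems.append("no Product JSON-LD found")
    for product in products:
        problems += [f"Product missing '{k}'" for k in REQUIRED_PRODUCT_KEYS if k not in product]
        offer = product.get("offers")
        if isinstance(offer, dict):
            problems += [f"Offer missing '{k}'" for k in REQUIRED_OFFER_KEYS if k not in offer]
    return problems
```

A daily job could run this over the top product URLs and raise an alert whenever the returned list is non-empty.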

Here's a complete FAQ schema implementation example[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai):

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [{
    "@type": "Question",
    "name": "What is LLM Search Optimization?",
    "acceptedAnswer": {
      "@type": "Answer",
      "text": "LLM Search Optimization is the practice of structuring content so that AI language models can understand, retrieve, and cite your website when answering relevant questions."
    }
  }]
}
```

Content Structure Patterns for Maximum LLM Recognition

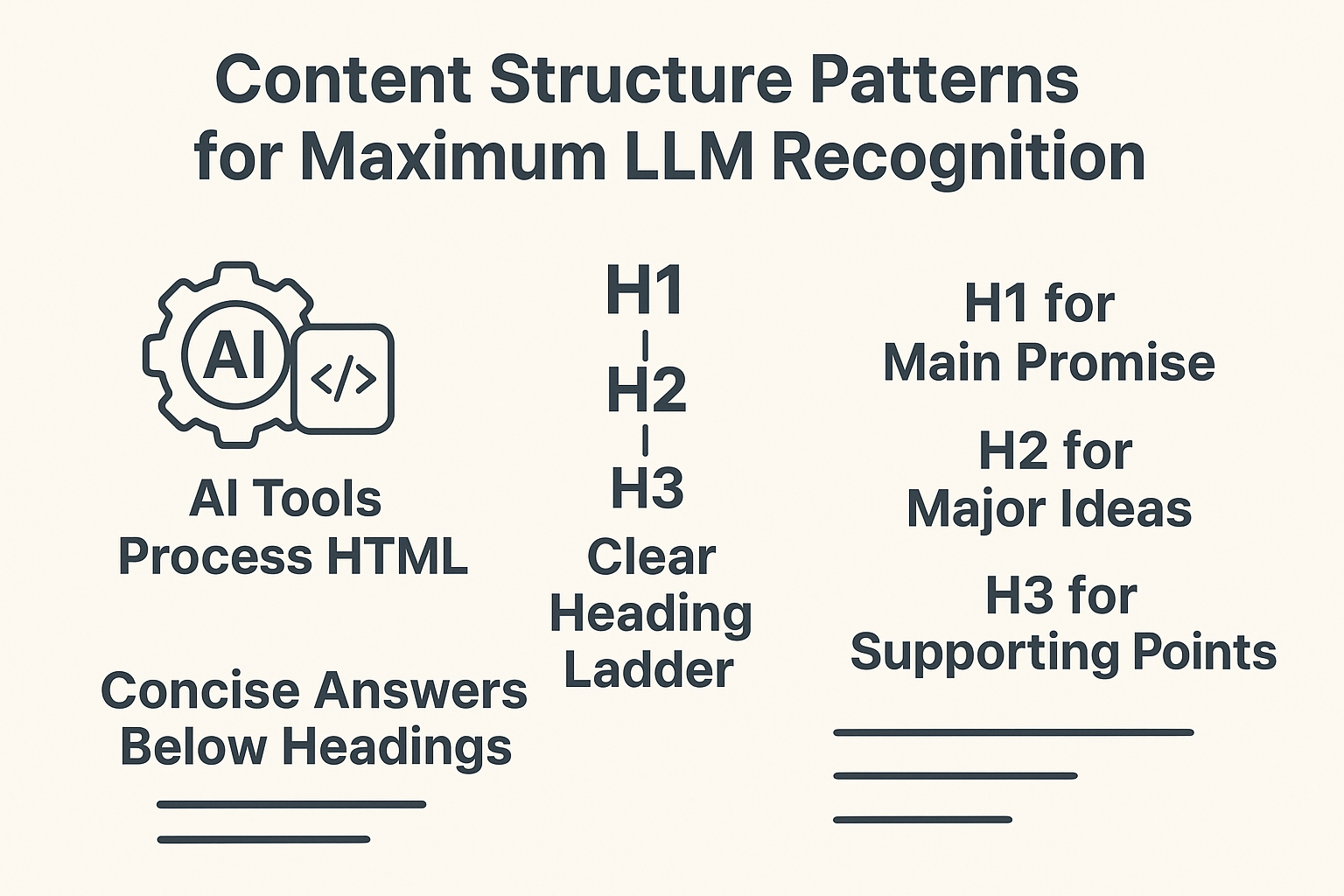

AI tools process the full HTML, outline the content structure, and decide which parts are trustworthy enough to quote. If your page is chaotic, buried in styled

Heading Hierarchy Standards

Build a clear heading ladder starting with one H1 that states the main promise, H2 blocks for each major idea, and H3 elements for supporting points. Keep headings short, descriptive, and front-loaded with the focus phrase. Turn headings into the very questions the page answers[[3]](https://seoprofy.com/blog/llm-seo/).

Place a brief, direct answer to the section's main question directly beneath each heading. Expand with concise support including short pros, cons, and clear "X vs Y" comparisons when the query calls for it[[3]](https://seoprofy.com/blog/llm-seo/). This structure mirrors the Featured Snippet optimization playbook but targets AI-generated answers as the primary outcome.
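
The heading ladder described above can be checked mechanically. This is a minimal sketch, assuming the two rules that matter are exactly one H1 and no skipped levels on the way down (for example, an H1 followed directly by an H3). `heading_problems` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records the level (1-6) of each heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return outline problems: wrong number of h1 tags or skipped heading levels."""
    outline = HeadingOutline()
    outline.feed(html)
    outline.close()
    levels = outline.levels
    problems = []
    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        # Going deeper by more than one level skips a rung of the ladder.
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current} (skipped level)")
    return problems
```

Moving back up the ladder (an H3 followed by an H2) is treated as normal, since it simply starts the next major section.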

Content Format Optimization

Format content with lists, tables, and bullet points to increase the chance of being selected for direct answers[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai). Provide clear, direct answers to common questions to target zero-click and AI-generated answer boxes[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai).

Implement these specific format patterns[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai):

- Definition boxes for key terms

- Step-by-step numbered processes

- Comparison tables with clear headers

- Bulleted lists of benefits, features, or considerations

- Pros and cons in parallel columns

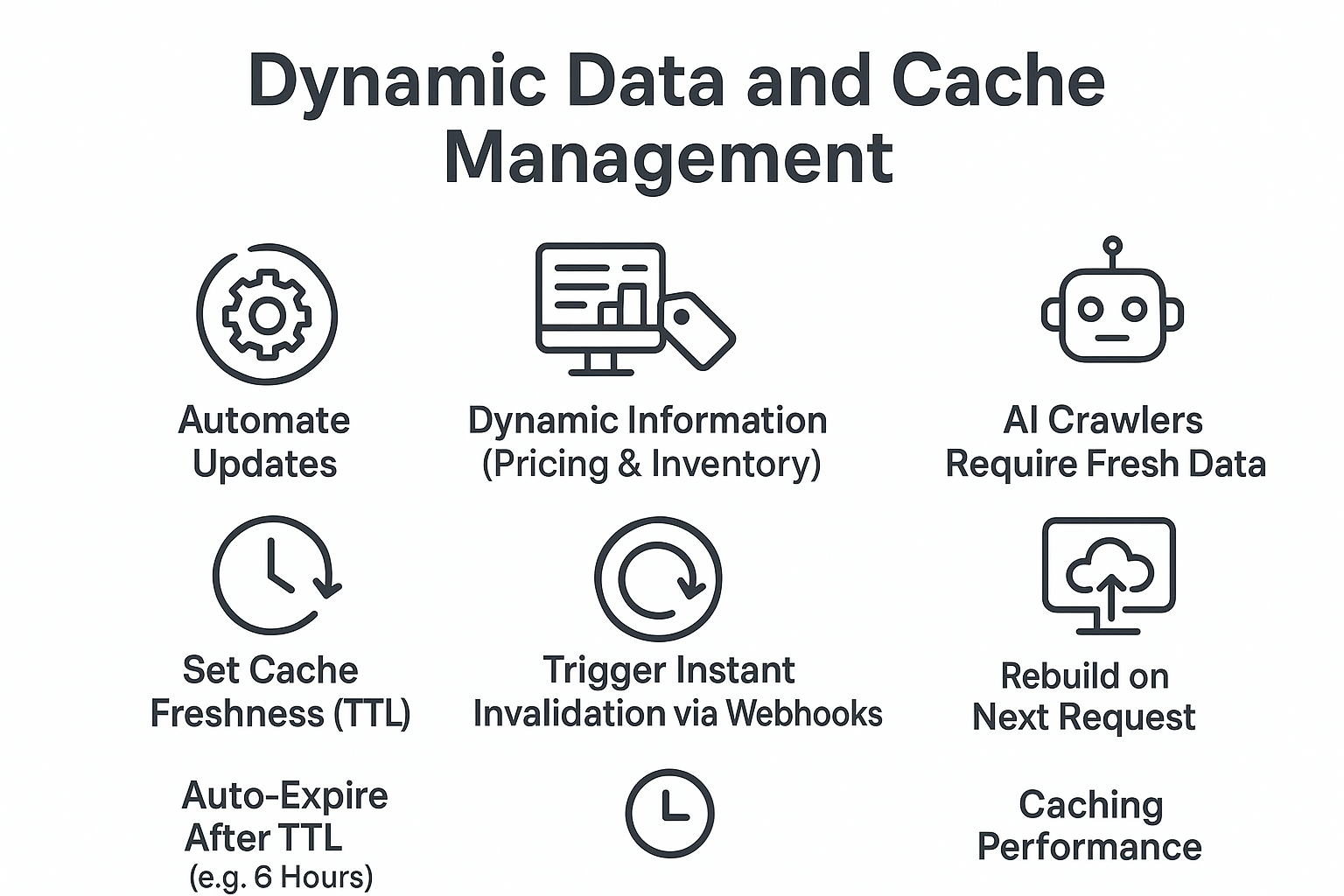

Dynamic Data and Cache Management

Automate updates to your product structured data, especially dynamic information such as pricing and inventory, as AI crawlers require fresh data for accurate results. Set cache freshness (TTL) and trigger instant invalidation via webhooks whenever your CMS or backend updates a product[[1]](https://prerender.io/blog/llm-product-discovery/).

If no webhook fires, the cached HTML should auto-expire after the TTL (for example, 6 hours) and rebuild on the next request. This allows crawlers to always receive the latest data while you still benefit from caching performance[[1]](https://prerender.io/blog/llm-product-discovery/).
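
The interplay of TTL expiry and webhook invalidation can be sketched with a small in-memory cache. This is an illustration under stated assumptions, not how any particular prerendering service works: `PrerenderCache`, its `render` callback, and the injectable `clock` are hypothetical names, and the 6-hour default mirrors the example TTL above.

```python
import time


class PrerenderCache:
    """Caches rendered HTML per URL with a TTL and explicit invalidation."""

    def __init__(self, render, ttl_seconds=6 * 60 * 60, clock=time.monotonic):
        self._render = render      # callable: url -> html (the expensive step)
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so tests can control time
        self._entries = {}         # url -> (html, stored_at)

    def get(self, url):
        """Return cached HTML if still fresh, otherwise rebuild and store it."""
        entry = self._entries.get(url)
        now = self._clock()
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0]
        html = self._render(url)
        self._entries[url] = (html, now)
        return html

    def invalidate(self, url):
        """Drop one URL; call this from the CMS or backend webhook handler."""
        self._entries.pop(url, None)
```

A webhook handler would call `invalidate(url)` when a price or stock level changes, so the next crawler request triggers a fresh render. If no webhook arrives, the entry still expires on its own once the TTL has passed.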

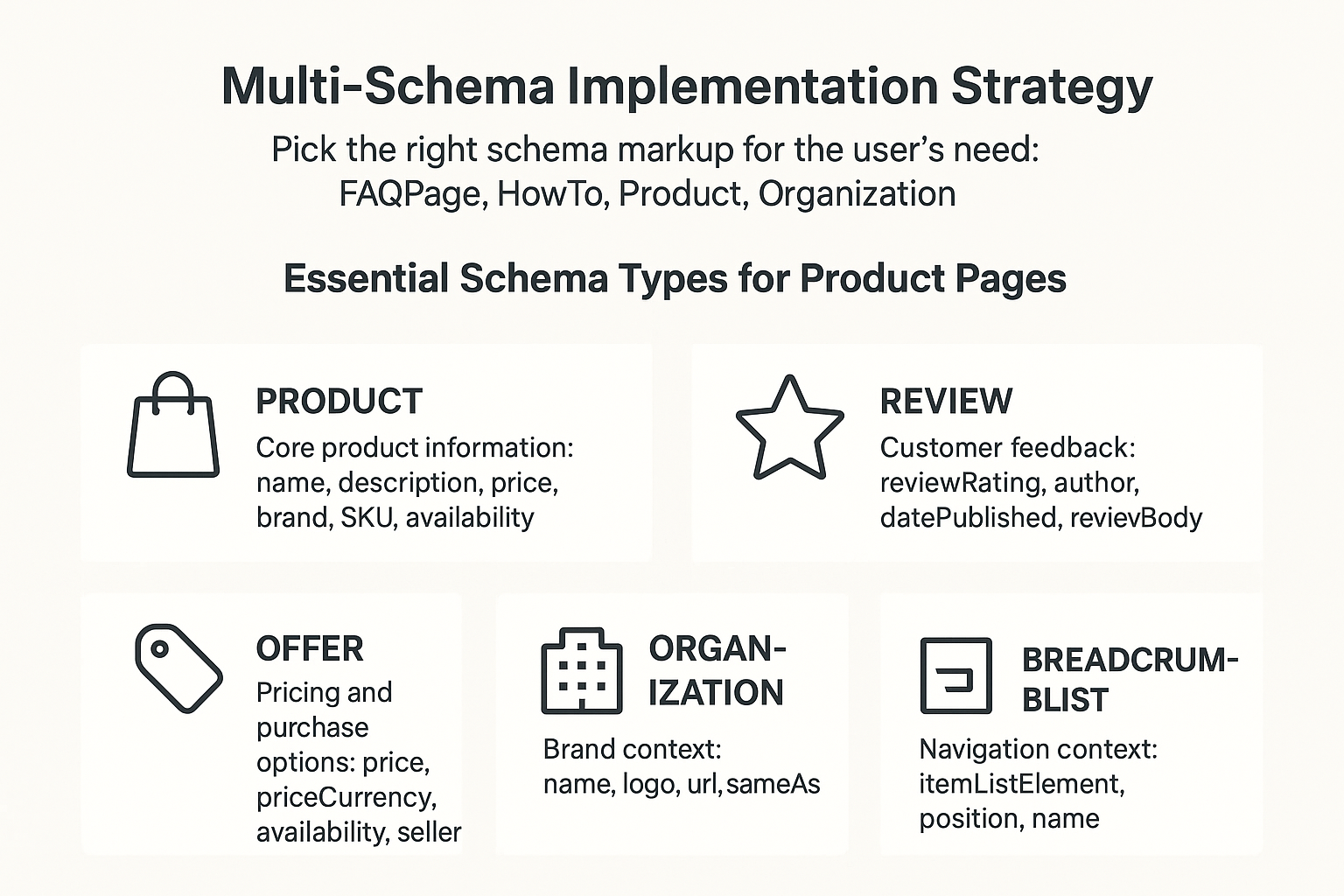

Multi-Schema Implementation Strategy

Pick the right schema markup for the user's need. FAQPage works for common questions, HowTo for step lists, Product for offers, and Organization for brand details[[3]](https://seoprofy.com/blog/llm-seo/). Layer these schemas to provide comprehensive context.

Essential Schema Types for Product Pages

| Schema Type | Purpose | Key Properties |
|-------------|---------|----------------|
| Product | Core product information | name, description, price, brand, SKU, availability |
| Review | Customer feedback | reviewRating, author, datePublished, reviewBody |
| Offer | Pricing and purchase options | price, priceCurrency, availability, seller |
| Organization | Brand context | name, logo, url, sameAs |
| BreadcrumbList | Navigation context | itemListElement, position, name |
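
One way to layer the schema types in the table is to emit them together in a single JSON-LD `@graph`. The sketch below assumes that approach. The function name `build_page_graph`, the input shapes, and the example.com URLs are made up for illustration, and the Product is linked to the Organization through a shared `@id`.

```python
import json


def build_page_graph(org, breadcrumbs, product):
    """Combine Organization, BreadcrumbList, and Product into one JSON-LD graph."""
    org_id = org["url"].rstrip("/") + "/#organization"
    return {
        "@context": "https://schema.org",
        "@graph": [
            {
                "@type": "Organization",
                "@id": org_id,
                "name": org["name"],
                "url": org["url"],
                "logo": org["logo"],
                "sameAs": org.get("same_as", []),
            },
            {
                "@type": "BreadcrumbList",
                "itemListElement": [
                    {"@type": "ListItem", "position": i, "name": name, "item": url}
                    for i, (name, url) in enumerate(breadcrumbs, start=1)
                ],
            },
            {
                "@type": "Product",
                "name": product["name"],
                "sku": product["sku"],
                # Points at the Organization node above instead of repeating it.
                "brand": {"@id": org_id},
                "offers": {
                    "@type": "Offer",
                    "price": product["price"],
                    "priceCurrency": product["currency"],
                },
            },
        ],
    }


graph = build_page_graph(
    org={"name": "Example Outfitters", "url": "https://example.com/",
         "logo": "https://example.com/logo.png"},
    breadcrumbs=[("Home", "https://example.com/"),
                 ("Boots", "https://example.com/boots"),
                 ("Trail Boot", "https://example.com/boots/trail-boot")],
    product={"name": "Trail Boot", "sku": "TB-001", "price": "129.00", "currency": "USD"},
)
print(json.dumps(graph, indent=2))
```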

Implement A/B testing to measure which schema properties most impact AI recommendation rates[[1]](https://prerender.io/blog/llm-product-discovery/). This data-driven approach identifies the specific markup elements that drive LLM visibility for your product category.

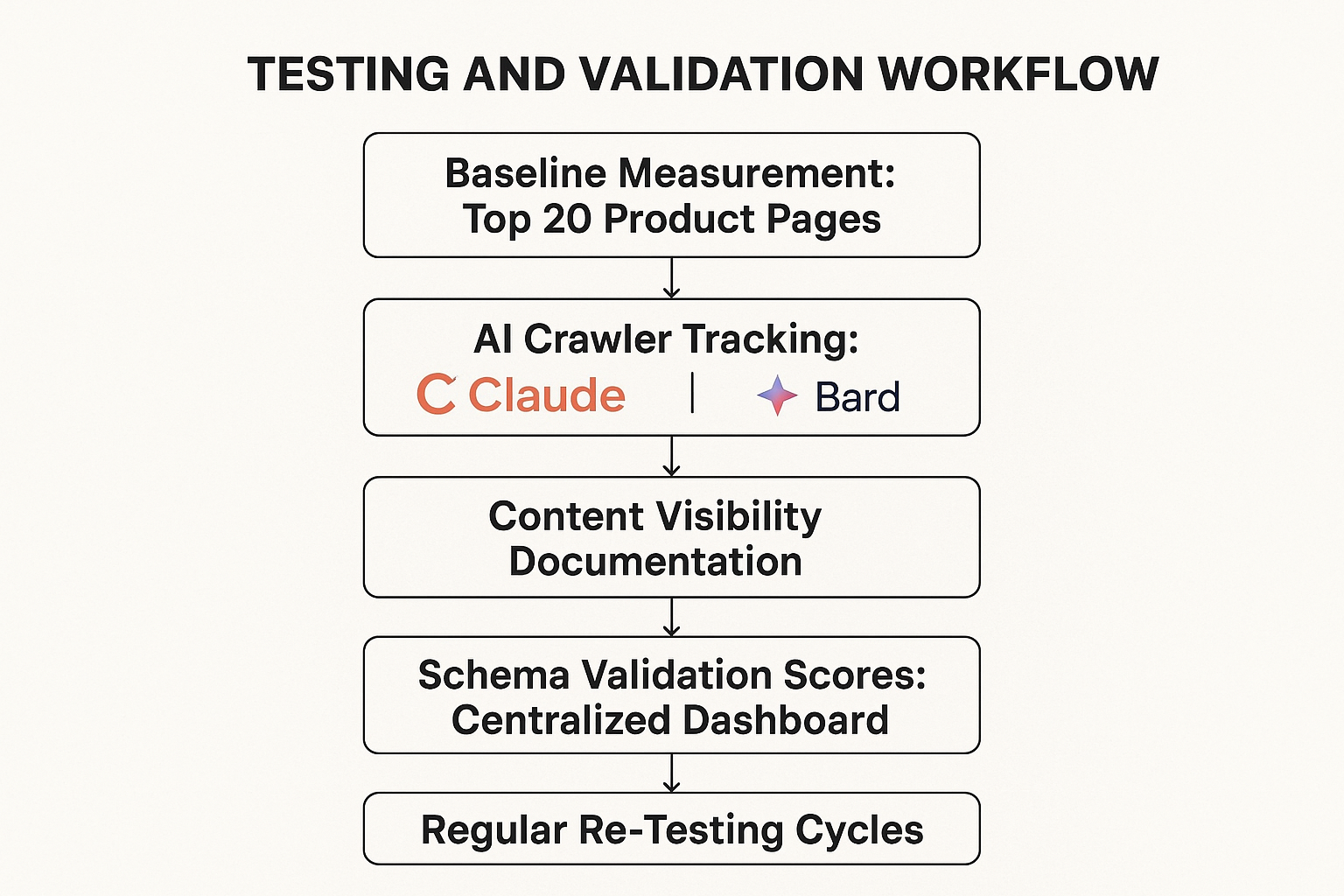

Testing and Validation Workflow

Test markup rendering across different AI systems to ensure compatibility[[1]](https://prerender.io/blog/llm-product-discovery/). Different LLMs may prioritize different schema properties or content structures, requiring systematic testing across platforms.

Create a testing protocol that includes:

- Baseline measurement across top 20 product pages

- AI crawler tracking for GPT, Claude, Gemini (formerly Bard), and other relevant systems

- Content visibility documentation noting what each system can and cannot see

- Schema validation scores maintained in a centralized dashboard

- Regular re-testing cycles as AI systems update their training data and response patterns
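
The protocol above can be backed by a simple record format that exports to a spreadsheet. The following is a sketch only: the `CrawlerCheck` fields and helper names are assumptions about what is worth tracking, and the crawler labels shown in the usage note are examples rather than a complete list.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CrawlerCheck:
    """One observation: what a given crawler could see on a given page."""
    page_url: str
    crawler: str
    name_visible: bool
    price_visible: bool
    schema_valid: bool


def to_csv(checks):
    """Serialize checks to CSV text suitable for an audit spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(CrawlerCheck)])
    writer.writeheader()
    for check in checks:
        writer.writerow(asdict(check))
    return buffer.getvalue()


def fully_visible_share(checks):
    """Per crawler, the share of checked pages where both name and price were visible."""
    totals = {}
    for check in checks:
        seen, total = totals.get(check.crawler, (0, 0))
        visible = check.name_visible and check.price_visible
        totals[check.crawler] = (seen + int(visible), total + 1)
    return {crawler: seen / total for crawler, (seen, total) in totals.items()}
```

Recording one `CrawlerCheck` per page and crawler (for instance `CrawlerCheck("/boots/trail-boot", "GPTBot", True, True, True)`) on each re-test gives a baseline that later runs can be compared against.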

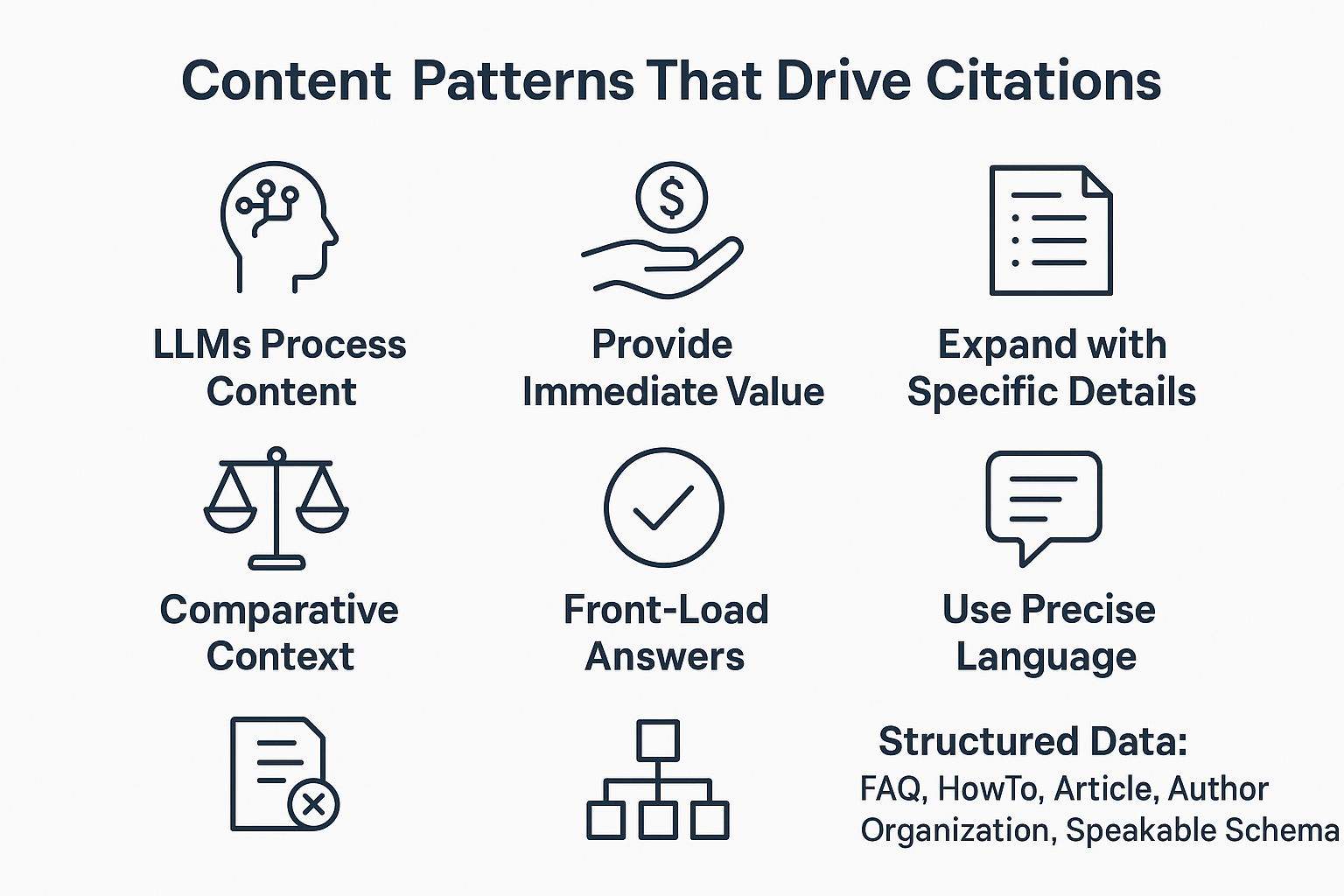

Content Patterns That Drive Citations

LLMs process content looking for clear, authoritative information they can trust and cite. Structure your product descriptions to provide immediate value in the first paragraph, expand with specific details in subsequent sections, and include comparative context when relevant.

The whole optimization process follows a similar playbook to Featured Snippet targeting but points toward AI answers as the primary goal[[3]](https://seoprofy.com/blog/llm-seo/). This means front-loading answers, using precise language, and eliminating vague or marketing-heavy descriptions that don't provide concrete information.

Use structured data to help models and search engines understand your content by adding FAQ schema, HowTo schema, Article and Author schema, Organization schema, and Speakable schema for voice-based answers[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai).

Conclusion

Optimizing product pages for LLM visibility requires a systematic approach combining technical implementation, comprehensive schema markup, clear content structure, and continuous validation. The foundation starts with ensuring AI crawlers can access your JavaScript-rendered content through prerendering solutions, followed by implementing 30+ schema properties validated across multiple platforms.

Content structure matters as much as technical implementation. Clear heading hierarchies, direct answers beneath each section, and format optimization through lists, tables, and comparison elements increase citation probability. Dynamic data management through automated cache invalidation ensures LLMs always access current pricing and availability information.

For marketing and SEO consultants, this represents a fundamental shift in optimization strategy. Success requires baseline metric establishment, systematic testing across multiple AI platforms, and regular optimization cycles as AI systems evolve. The technical investment pays dividends through increased product visibility in AI-powered recommendations, directly impacting your clients' revenue potential in an AI-first search landscape.

Key Takeaways

- Technical foundation first: Implement prerendering to ensure AI crawlers can access JavaScript-rendered product content before any other optimization efforts

- Comprehensive schema markup: Include 30+ product properties beyond basic requirements, implementing interconnected schemas (Product, Review, Offer, Organization, BreadcrumbList)

- Structured content hierarchy: Use clear H1/H2/H3 patterns with direct answers beneath each heading, formatted as lists, tables, and comparison elements

- Multi-platform validation: Test markup rendering across GPT, Claude, Gemini (formerly Bard), and other AI systems with automated daily validation checks

- Dynamic data management: Automate schema updates for pricing and inventory with cache invalidation triggered by webhooks

- Continuous optimization: Establish baseline metrics and conduct regular re-testing cycles as AI systems update their training data

FAQs

How do LLMs process product pages differently than traditional search engines?

LLMs convert product information into vector embeddings (mathematical representations) and use semantic understanding to make connections between related concepts. While traditional search engines match keywords, LLMs process relationships between color, material, product type, seasonal use, and style preferences simultaneously, understanding context without explicit specification[[1]](https://prerender.io/blog/llm-product-discovery/).

What is the minimum schema markup required for LLM optimization?

At minimum, include name, description, price, availability, brand, and SKU in your product schema. However, optimal LLM visibility requires 30+ properties including aggregateRating, category, color, size, weight, material, manufacturer, model, gtin, mpn, and condition. Implement related schemas like Review, Offer, Organization, and BreadcrumbList for complete contextual understanding[[1]](https://prerender.io/blog/llm-product-discovery/).

Why does JavaScript rendering create problems for AI crawlers?

Many ecommerce sites use JavaScript to display product information, but AI crawlers often cannot execute JavaScript to reveal this content. Without prerendering solutions that serve HTML versions to crawlers, product names, prices, and descriptions remain invisible to LLMs, making all other optimization efforts ineffective[[1]](https://prerender.io/blog/llm-product-discovery/).

How often should product schema data be updated?

Automate schema updates for dynamic information like pricing and inventory through webhooks triggered by CMS changes. Set cache freshness (TTL) to expire after 6 hours if no webhook fires, ensuring AI crawlers always receive current data while maintaining caching performance benefits[[1]](https://prerender.io/blog/llm-product-discovery/).

What validation tools should be used for schema markup testing?

Use Google's Rich Results Test, Schema.org Playground, and Schema Markup Validator API for comprehensive validation. Set up automated daily validation checks, create alerts for validation failures, and maintain a validation score dashboard. Test markup rendering across different AI systems (GPT, Claude, Gemini, formerly Bard) to ensure cross-platform compatibility[[1]](https://prerender.io/blog/llm-product-discovery/)[[3]](https://seoprofy.com/blog/llm-seo/).

How does content structure impact LLM citation rates?

LLMs prioritize content with clear heading hierarchies (H1 for main promise, H2 for major ideas, H3 for supporting points) and direct answers placed beneath each heading. Pages structured with lists, tables, bullet points, and comparison elements increase citation probability because they're easier for models to process and quote[[2]](https://www.m8l.com/blog/llm-search-optimization-how-to-make-your-website-visible-to-ai)[[3]](https://seoprofy.com/blog/llm-seo/).